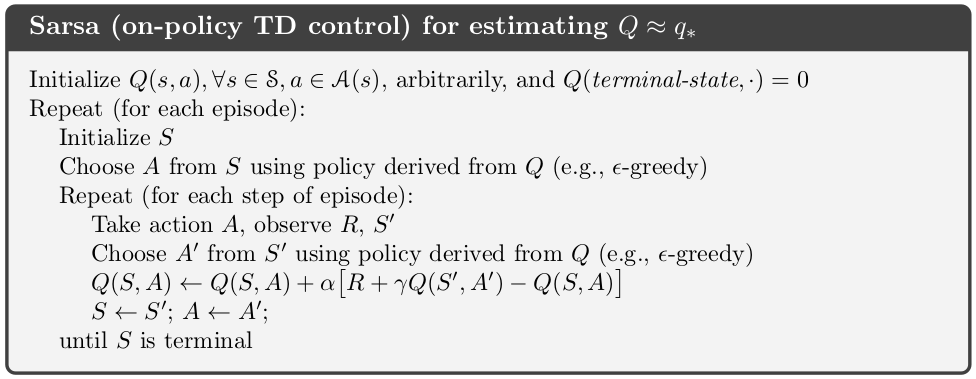

SARSA Algorithm in Python: Robot Learns to Self-Balance with N-Step SARSA (Complete Reinforcement Learning Tutorial, YouTube)

This observation led to the technique's name: SARSA stands for State-Action-Reward-State-Action, which symbolizes the tuple (s, a, r, s', a').
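Written out, the one-step SARSA update uses every element of that tuple. The form below is the standard one from Sutton and Barto's textbook; the step size α and discount factor γ are symbols introduced here, not taken from the original post:

$$
Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \, Q(s', a') - Q(s, a) \right]
$$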

These algorithms, aside from being useful, pull together many of the key concepts in RL, so they provide a great way to learn about RL more generally. A machine can be trained to make a sequence of decisions to achieve a definite goal. Expected SARSA is an alternative technique for improving the agent's policy.

I also understand how the SARSA algorithm works; there are many sites with pseudocode, and I get it. I've implemented this algorithm in my problem following all the steps, but when I check the final Q function after all the episodes, I notice that all values tend to zero, and I don't know why.
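Expected SARSA replaces the sampled next action a' with an average over all next actions, weighted by the current policy. A minimal sketch, assuming a tabular Q stored as a NumPy array of shape (n_states, n_actions) and an epsilon-greedy policy; the function name and default parameter values are illustrative choices, not from the original post:

```python
import numpy as np


def expected_sarsa_update(Q, s, a, r, s_next, alpha=0.1, gamma=0.99,
                          epsilon=0.1, done=False):
    """One Expected SARSA update on a tabular Q array (n_states, n_actions).

    The target uses the expected value of Q(s', .) under an epsilon-greedy
    policy derived from Q, instead of the value of one sampled action a'.
    """
    n_actions = Q.shape[1]
    if done:
        expected_next = 0.0  # terminal states have value 0
    else:
        # epsilon-greedy probabilities: epsilon / n for every action,
        # plus the remaining (1 - epsilon) on the greedy action
        probs = np.full(n_actions, epsilon / n_actions)
        probs[np.argmax(Q[s_next])] += 1.0 - epsilon
        expected_next = float(np.dot(probs, Q[s_next]))
    td_target = r + gamma * expected_next
    Q[s, a] += alpha * (td_target - Q[s, a])
    return Q
```

Averaging over next actions removes the variance that comes from sampling a', at the cost of a sum over all actions per update.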

Discuss the on-policy algorithm SARSA and SARSA(λ) with eligibility traces. As usual, it follows generalized policy iteration and, like TD learning in general, it bootstraps. This post shows how to implement the SARSA algorithm using eligibility traces in Python. It is part of a series of articles about reinforcement learning that I will be writing.
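A minimal sketch of one SARSA(λ) step with accumulating eligibility traces, assuming tabular Q and trace arrays E of the same shape; `sarsa_lambda_step` and its defaults are hypothetical, and other trace variants (for example replacing traces) exist:

```python
import numpy as np


def sarsa_lambda_step(Q, E, s, a, r, s_next, a_next, alpha=0.1, gamma=0.99,
                      lam=0.9, done=False):
    """One step of tabular SARSA(lambda) with accumulating eligibility traces.

    Q and E are float arrays of shape (n_states, n_actions), both updated in
    place. E should be reset to zeros at the start of every episode.
    """
    next_value = 0.0 if done else Q[s_next, a_next]
    delta = r + gamma * next_value - Q[s, a]  # TD error for this transition
    E[s, a] += 1.0                            # bump the trace of the visited pair
    Q += alpha * delta * E                    # every pair moves in proportion to its trace
    E *= gamma * lam                          # all traces decay toward zero
    return Q, E
```

Because one TD error updates every recently visited pair at once, a reward at the end of an episode propagates back along the whole trajectory in a single step, which is the usual argument for SARSA(λ) being more sample-efficient than one-step SARSA.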


How to plug a deep neural network or other differentiable model into your RL algorithm. The fundamental reason anyone uses learning algorithms rather than search algorithms is that search becomes too slow once there are too many possible states and actions.

Implementing SARSA(λ) in Python, posted on October 18, 2018. In Python, you can think of a policy as a dictionary whose keys are states and whose values are actions. SARSA's update uses the value of the next state together with the action chosen by the current policy, which is what makes it an on-policy method. Learn, develop, and deploy advanced reinforcement learning algorithms to solve a variety of tasks.
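To make the dictionary view concrete, here is a minimal sketch assuming the Q table is a plain dict keyed by (state, action) pairs, with unseen pairs treated as 0.0. `epsilon_greedy` and `greedy_policy` are hypothetical helper names:

```python
import random


def epsilon_greedy(Q, state, actions, epsilon, rng=random):
    """With probability epsilon pick a random action, else the greedy one."""
    if rng.random() < epsilon:
        return rng.choice(actions)
    return max(actions, key=lambda a: Q.get((state, a), 0.0))


def greedy_policy(Q, states, actions):
    """Extract a deterministic policy as a dict mapping state -> action."""
    return {s: max(actions, key=lambda a: Q.get((s, a), 0.0)) for s in states}
```

During learning, SARSA picks both a and a' with `epsilon_greedy`, so its targets reflect the exploratory policy it actually follows; `greedy_policy` is only for reading off the final state-to-action dictionary.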

In order to know the best action to take from any other state-action pair (which is what SARSA calculates), you would need to search the entire graph from… Talk about why SARSA(λ) is more efficient. Reinforcement Learning in Python: implementing reinforcement learning (RL) algorithms for global path planning in mobile robot navigation tasks.
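The title refers to n-step SARSA, which sits between one-step SARSA and SARSA(λ): it bootstraps only after n observed rewards. A minimal sketch of the n-step target G = R₁ + γR₂ + … + γⁿ⁻¹Rₙ + γⁿ Q(S_{t+n}, A_{t+n}), assuming a dict-based Q table; both function names are hypothetical:

```python
def n_step_return(rewards, gamma, bootstrap_q=0.0):
    """n-step SARSA target.

    rewards: [R_{t+1}, ..., R_{t+n}] observed after taking A_t in S_t.
    bootstrap_q: Q(S_{t+n}, A_{t+n}), or 0.0 if the episode ended first.
    """
    G = 0.0
    for k, r in enumerate(rewards):
        G += (gamma ** k) * r                        # discounted observed rewards
    G += (gamma ** len(rewards)) * bootstrap_q       # bootstrap from the n-th pair
    return G


def n_step_sarsa_update(Q, s, a, rewards, gamma, alpha, bootstrap_q=0.0):
    """Move Q[(s, a)] toward the n-step target; Q is a dict keyed by (s, a)."""
    G = n_step_return(rewards, gamma, bootstrap_q)
    old = Q.get((s, a), 0.0)
    Q[(s, a)] = old + alpha * (G - old)
    return Q
```

With a single reward the target reduces to the one-step SARSA target r + γQ(s', a').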


The name SARSA comes from the principle of the algorithm, where on each step we use the current state, the action taken, the reward received, the next state, and the next action. In this section, we will use SARSA to learn an optimal policy for a given MDP.
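A minimal sketch of that single step as code, assuming a tabular Q stored as a NumPy array indexed by integer states and actions; `sarsa_update` and its defaults are illustrative, not the original author's implementation:

```python
import numpy as np


def sarsa_update(Q, s, a, r, s_next, a_next, alpha=0.1, gamma=0.99, done=False):
    """Apply one SARSA update to Q[s, a] using the tuple (s, a, r, s', a')."""
    # terminal transitions have no next action to bootstrap from
    target = r if done else r + gamma * Q[s_next, a_next]
    Q[s, a] += alpha * (target - Q[s, a])
    return Q
```

The `done` flag matters: bootstrapping from a terminal state's entry is a common source of wrong values in hand-written SARSA loops.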

In this notebook, you will investigate how these two algorithms behave on Cliff World (described on page 132 of the textbook).
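A minimal sketch of one-step SARSA on a cliff grid, assuming the layout of the textbook's cliff-walking example: a 4 × 12 grid, reward -1 per step, and -100 plus a reset to the start for stepping into the cliff. The constants, `step`, `choose`, and `train_sarsa` are hypothetical names chosen for this illustration:

```python
import numpy as np

# Assumed layout: start bottom-left, goal bottom-right, cliff cells in between.
ROWS, COLS = 4, 12
START, GOAL = (3, 0), (3, 11)
MOVES = [(-1, 0), (0, 1), (1, 0), (0, -1)]  # up, right, down, left


def step(state, action):
    """Return (next_state, reward, done) for one move on the cliff grid."""
    r, c = state
    dr, dc = MOVES[action]
    r = min(max(r + dr, 0), ROWS - 1)  # moving into a wall leaves the agent in place
    c = min(max(c + dc, 0), COLS - 1)
    if r == ROWS - 1 and 0 < c < COLS - 1:  # stepped into the cliff
        return START, -100.0, False          # back to start; episode continues
    return (r, c), -1.0, (r, c) == GOAL


def choose(Q, state, epsilon, rng):
    """Epsilon-greedy action selection with random tie-breaking."""
    if rng.random() < epsilon:
        return int(rng.integers(len(MOVES)))
    q = Q[state]
    return int(rng.choice(np.flatnonzero(q == q.max())))


def train_sarsa(episodes=500, alpha=0.5, gamma=1.0, epsilon=0.1,
                max_steps=1000, seed=0):
    rng = np.random.default_rng(seed)
    Q = np.zeros((ROWS, COLS, len(MOVES)))
    returns = []
    for _ in range(episodes):
        s = START
        a = choose(Q, s, epsilon, rng)
        total = 0.0
        for _ in range(max_steps):
            s_next, r, done = step(s, a)
            total += r
            if done:
                # the terminal state has value 0, so the target is just r
                Q[(*s, a)] += alpha * (r - Q[(*s, a)])
                break
            a_next = choose(Q, s_next, epsilon, rng)
            # on-policy target: bootstrap from the action actually chosen next
            Q[(*s, a)] += alpha * (r + gamma * Q[(*s_next, a_next)] - Q[(*s, a)])
            s, a = s_next, a_next
        returns.append(total)
    return Q, returns
```

Calling `Q, returns = train_sarsa()` and averaging the last few entries of `returns` gives a rough learning curve. Epsilon is held fixed here, so SARSA evaluates the exploratory policy it actually follows; that is why it tends to prefer paths that keep some distance from the cliff edge.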

